Call for Papers: Special Issue

MISQ Special Issue on Registered Reports

Special Issue Editors

Shuk Ying Ho, Australian National University

Jan Recker, University of Hamburg

Chee-Wee Tan, Copenhagen Business School

Anthony Vance, Virginia Tech

Han Zhang, Georgia Tech

misqregisteredreport@gmail.com

Introductory Workshop (optional): February 20, 2023 (registrations due February 4)

Proposal Deadline: July 1, 2023

Stage 1 Submission Deadline: October 1, 2023


Background for the Special Issue

An irony of science is that, despite our devotion to evidence, there is little evidence underpinning many aspects of the journal review process (Linkov et al., 2006; Tennant & Ross-Hellauer, 2020). As part of our commitment to continually improving our review processes at MIS Quarterly (MISQ) (Lee, 1999; Saunders, 2005; Straub, 2008; Rai, 2016), this special issue aims to obtain evidence regarding the merits of a recent innovation in the review process: registered reports.

While the registered report model is relatively new, it has been adopted by over 200 journals across the sciences (Chambers, 2019). Business and Information Systems Engineering was the first (and so far, lone) IS journal to adopt this model (Weinhardt et al., 2019). Other early adopters across the business fields include Journal of Accounting Research (JAR), Academy of Management Discoveries, and Leadership Quarterly.

Our goal in this special issue is to learn if the registered report model should be available as an ongoing model for MISQ, as a supplement to our existing options. While we see a lot of promise in the model, we plan to test it first to learn if/how it can best suit our journal.

What is the Registered Report Model?

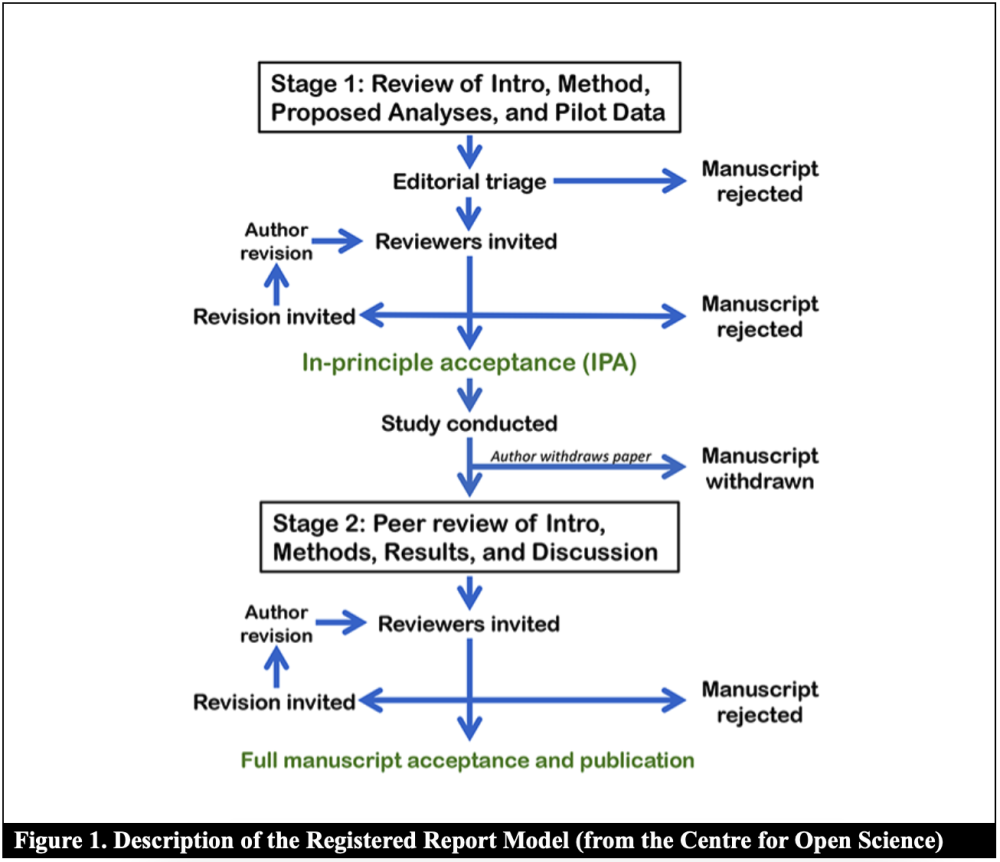

As Figure 1 shows, the registered report model involves a 2-stage ‘acceptance’ process: a conditional acceptance before data collection, followed by an acceptance if the authors follow the agreed plan.

Compared to a normal review process, there are two main differences:

- Pre/pilot data may (or may not) have been collected before conditional acceptance, but the main data will not have been collected prior to the conditional acceptance being offered.

- The paper cannot be rejected due to its results.

Please note that registered reports are not the same as ‘pre-registrations.’ Pre-registrations refer to the activity of registering one’s hypotheses (and other details) prior to testing them. Registered reports go a step further than pre-registrations in that the paper is submitted to the journal before the final data has even been collected.

Purported Benefits of the Registered Report Model

Early tests of the registered report model across the sciences are yielding positive results (Chambers & Tzavella, 2022; Scheel et al., 2021; Soderberg et al., 2021), especially on the following dimensions:

Bias reduction: Registered reports were invented to overcome biases that can occur in review processes when editors/reviewers prefer to see results in a particular direction. For instance, editors/reviewers tend to reject papers with insignificant results, mixed results, or results that run counter to their desires. Registered reports facilitate the publication of such papers. Registered reports can also help reduce biases that affect authors because they free authors from seeking the results they think they need to pass the review process.

Investment motivation: It often takes substantial time and resources to collect an excellent data set. This can be risky if it may take multiple years to get a paper through the review process, only to get a late-round rejection. On the other hand, if you have received conditional acceptance on your paper before collecting the data, this transfers some of the risk from the authors to the editors. Authors are likely to be much more motivated to undertake the effort/time/cost to get the data if they know that their paper will not get rejected. This should increase our chances of getting higher-investment, higher-impact papers into MISQ.

Review Criteria for the Special Issue

Submissions to this special issue will be reviewed in a three-step process. Each step will focus on different criteria, which are outlined below:

Step 1: Submission Proposals

The senior editors will examine the submission to ensure it matches our criteria for suitability, which are:

- Does the paper follow a hypothetico-deductive model of science? Does the paper seek to contribute by proposing predictions/hypotheses and testing them against empirical data?

  - Note: Researchers may use the logic of hypothesis testing with many types of data (e.g., qualitative or quantitative, and primary or secondary data). The approach can also be used for many genres of IS research, including behavioral, organizational, design science, and economics. The use of hypothesis testing also does not necessitate the use of null hypothesis significance testing; for example, Bayesian methods of analysis can also be used.

- Is the paper likely to benefit from a registered report model of peer-review compared to a regular peer-review process? In particular:

  - Bias reduction: Could the research potentially lead to results that contradict expectations and, thereby, trigger biases in a traditional review process (e.g., biases against controversial or null results)?

  - Investment motivation: Could the research involve substantial investments (e.g., time/funds) that a traditional review process may disincentivise? That is, is it reasonable to assume that a research team may put greater effort into a risky empirical project if they have the review team’s support for the paper irrespective of the results?

Step 2: Stage 1 Review (prior to final results)

Next, the submission will be examined based on criteria for conditional (results-independent) acceptance:

- Does the paper make hypotheses/predictions that, irrespective of the results, offer the potential for a significant contribution to research and practice?

- Does the paper propose a research design and analytical approach that, if followed, would allow the purported hypotheses/predictions to be tested with an acceptable degree of rigor?

Note: Some pre- or pilot-test results might be included at this stage, to show feasibility of the approach (e.g., for manipulation checks or power calculations), but they are not mandatory.

Step 3: Stage 2 Review (paper with results)

Lastly, the submission will be examined based on criteria for acceptance, which are:

- Did the paper implement the accepted research design and analytical approach?

- Is the paper written to acceptance standards?

- Additional analyses (optional): While the realization of the results will not affect acceptance, it might result in requests for additional analysis from the editorial/review team to enhance the study’s contribution. Acceptance of the paper does not depend on the pattern of results reported – it only requires the authors to conduct and report the additional analyses.

Process and Timeline (subject to change)

All submission proposals must be submitted via the proposal website: https://umn.qualtrics.com/jfe/form/SV_41wvfmPquoQ6MbY. All papers moving forward to Stage 1 must then be submitted in the Special Issue category through MISQ’s ScholarOne submission site located at https://mc.manuscriptcentral.com/misq.

All submissions must adhere to MISQ submission instructions (see https://misq.org/instructions/), with a maximum length of 20 pages for proposals, 30 pages for Stage 1 submission (i.e., the length of an MISQ Research Note), and 55 pages for Stage 2 submissions (i.e., the length of an MISQ Research Article).

Optional Introductory Workshop: Registration due February 4, 2023; Workshop on February 20, 2023

Interested authors have the option to attend a workshop hosted by the Special Issue Senior Editors that will briefly describe the aims and process of the Special Issue, as well as answer any questions. To register, please sign up here: https://umn.qualtrics.com/jfe/form/SV_0Do5qQbcLpoaXPw

Proposal Submission: Due July 1, 2023

As this Special Issue is offered to test the Registered Report model, the Special Issue Senior Editors (SEs) aim to consider only the minimum number of submissions. Accordingly, the team seeks 20-30 proposal submissions in total. Proposals must be submitted to the Special Issue SEs at https://umn.qualtrics.com/jfe/form/SV_41wvfmPquoQ6MbY by July 1, 2023. The proposals should briefly outline the paper’s motivation, background, theory/hypotheses, method, and expected contribution to the field. Proposals must address the criteria outlined in Step 1.

Proposals will be screened by the Special Issue Senior Editor and up to one (1) guest Associate Editor from the current MISQ Editorial Board based on topic, theory, and method expertise. The Special Issue Senior Editors will seek to identify up to 30 papers; the remaining papers will be desk rejected. Papers receiving a desk rejection will only receive high-level feedback based on lack of fit with the Special Issue goals. Authors of papers moving to the next stage in the process will receive feedback on how to strengthen the paper prior to submitting through MISQ’s ScholarOne submission site.

Stage 1 Submission: Due October 1, 2023

Papers moving to this stage should provide a refined version of the material in the proposal and can be a maximum length of 30 pages. Each submission will be assigned to two (2) Special Issue Senior Editors based on topic, theory, and method fit. The submission will be screened, and if deemed ready, will be sent out for review focusing on the criteria in Step 2. Submissions will receive a rejection, revision, or conditional acceptance at this stage. Multiple revisions may be required before a decision is rendered.

Remember: the final data is not collected at this stage. Instead, the research design and approach(es) for data collection and analysis must be described in enough detail to allow the review team to judge the expected contribution if the authors carried out the proposal plan.

Special Issue Workshop for Stage 1 Authors: Early-Mid January 2024

A Special Issue workshop will be held to provide advice to authors of papers still progressing in the review process. Advice will focus on passing Stage 1 and key considerations to move from Stage 1 to Stage 2.

Next Steps in the Review Process

In most Special Issues, papers move through (or exit) the review process at the same time. However, this is less practical with registered reports, as papers may receive differing decisions during Stage 1. Even though the papers will not necessarily move through the review process together, maximum times are set for each stage to facilitate our test of the Registered Report model.

To continue in the review process, a paper must reach conditional acceptance at Stage 1 within one year of first-round submission (i.e., by October 1, 2024) and must be submitted for Stage 2 review within nine months of receiving a Stage 1 conditional acceptance. Nine months are offered (rather than the normal six months) to allow for higher-investment data collection. Authors will not be offered extensions beyond these time limits.

Special Issue Publication, Editorial, and Showcase Event: To Be Determined

At the conclusion of the Special Issue, the Special Issue Editors will host a showcase event to promote the accepted papers and share learnings from the test, as well as describe if/how the model will be incorporated at MISQ in the future. They will also write an editorial on the process and key lessons learned.

Key Dates

- Introductory workshop: February 20, 2023

- Proposal submissions due via email: July 1, 2023

- Proposal submissions decisions expected: August 1, 2023

- Stage 1 submissions due via ScholarOne: October 1, 2023

- Stage 1 initial decisions expected: December 15, 2023

- Special Issue workshop for Stage 1 authors: Early-Mid January 2024

- Stage 1 submissions must receive a conditional acceptance no later than October 1, 2024

- Stage 2 submissions due within 9 months of Stage 1 conditional acceptance

- Special Issue Publication, Editorial, and Showcase Event: TBD

Frequently Asked Questions (FAQ)

Do the stages of the review process refer to rounds? Is it a 2-round review process?

No. Multiple rounds may occur at each stage of the review process. We do not constrain the process to any number of revisions, just as we do not constrain the number of revisions in the normal review process. Editors will limit the number of revisions to the extent possible/reasonable. Experience at other journals suggests that the Stage 1 review process could be more intensive than a normal review process because the review team needs assurance that the research design and analytical approach will offer a sufficient contribution. The payoff for extra work at the front-end is that the Stage 2 review should be faster.

Can all papers using a hypothesis-testing approach at MISQ request to use the registered report model of peer review rather than using the traditional review process?

Please see the criteria for suitability. We do not envisage that all hypothesis-testing papers will be suitable for the registered report model. Rather, the model is most suitable for papers that meet the criteria of bias reduction and investment motivation.

As an author, what prevents me from “gaming” the system (e.g., by collecting the data beforehand and submitting the research as if I did not know the results)?

The Stage 1 process can include several rounds during which you may be asked to change your data collection and/or analysis strategy. As a result, relying on pre-collected and pre-analysed data would be unlikely to succeed. Such attempts to “game” the review process would also breach publication ethics.

Does the focus on hypothesis testing research mean that no exploratory analysis is possible in these papers?

No. Exploratory analyses could be highly applicable in papers submitted to the registered report review process. While exploratory research is not a required element of hypothesis-testing research, it can complement it (e.g., by clarifying the boundaries within which the hypotheses are supported/refuted). While the relevance of exploratory analyses will depend on the specific paper, such analyses could be a consideration during both stages of the review process. In Stage 1, the authors may propose (and the review team may assess and suggest revisions to) a set of exploratory analyses. In Stage 2, the review team may request additional analyses to enhance the paper’s contribution, and the authors may propose additional analyses in consultation with the Senior Editors (also see next question).

After the Stage 1 review process, is the process absolutely rigid so that authors and editors cannot deviate from the agreed process at all?

No. We propose to balance strictness with some flexibility, following recommendations from the earlier test at JAR. They found it helped to use a model that still demanded detailed transparency about what the authors planned to do; then, instead of an unconditional promise of acceptance (i.e., the paper is accepted at Stage 2 if the Stage 1 plan is followed), the model offers a weaker promise of result-independent acceptance. This allows authors and editors to instigate changes based on the results; the paper cannot be rejected at Stage 2 if the authors conduct the tests outlined in Stage 1. The authors may decide to conduct additional tests in Stage 2 beyond those specified in Stage 1, while the Special Issue Senior Editors may also place conditions on Stage 2 acceptance regarding additional tests. In the latter case, it is only the conduct of the additional analyses that is required for acceptance, not a particular pattern of results. At JAR, they referred to this process as balancing flexibility and brittleness in planned analyses (Bloomfield et al., 2018), which is a balance that we see as valuable, too.

References

Bloomfield, R., Rennekamp, K., & Steenhoven, B. (2018). No system is perfect: Understanding how registration-based editorial processes affect reproducibility and investment in research quality. Journal of Accounting Research, 56(2), 313–362. https://doi.org/10.1111/1475-679X.12208

Chambers, C. D. (2019). What’s next for registered reports? Nature, 573, 187–189. https://doi.org/10.1038/d41586-019-02674-6

Chambers, C. D., & Tzavella, L. (2022). The past, present, and future of registered reports. Nature Human Behaviour, 6(1), 29–42.

Lee, A. S. (1999). Editor’s comments: The role of information technology in reviewing and publishing manuscripts at MIS Quarterly. MIS Quarterly, 23(4), iv–ix.

Linkov, F., Lovalekar, M., & LaPorte, R. (2006). Scientific journals are ‘faith based’: Is there science behind peer review? Journal of the Royal Society of Medicine, 99(12), 596–598.

Rai, A. (2016). Editor’s comments: Writing a virtuous review. MIS Quarterly, 40(3), iii–x.

Saunders, C. (2005). Editor’s comments: From the trenches: Thoughts on developmental reviewing. MIS Quarterly, 29(2), iii–xii.

Scheel, A. M., Schijen, M. R. M. J., & Lakens, D. (2021). An excess of positive results: Comparing the standard psychology literature with registered reports. Advances in Methods and Practices in Psychological Science, 4(2), 1–12.

Soderberg, C. K., Errington, T. M., Schiavone, S. R., Bottesini, J., Thorn, F. S., Vazire, S., Esterling, K. M., & Nosek, B. A. (2021). Initial evidence of research quality of registered reports compared with the standard publishing model. Nature Human Behaviour, 5(8), 990–997. https://doi.org/10.1038/s41562-021-01142-4

Straub, D. W. (2008). Editor’s comments: Type II reviewing errors and the search for exciting papers. MIS Quarterly, 32(2), v–x.

Tennant, J. P., & Ross-Hellauer, T. (2020). The limitations to our understanding of peer review. Research Integrity and Peer Review, 5(6), 1–14. https://doi.org/10.1186/s41073-020-00092-1

Weinhardt, C., van der Aalst, W. M. P., & Hinz, O. (2019). Introducing registered reports to the information systems community. Business & Information Systems Engineering, 61(4), 381–384.